developing shared npm packages locally

My second post on this blog was about how complicated it seems to be to develop shared npm packages locally. I decided to take another crack at finding a better workflow.

The situation is more or less the same: I have hop, my scss/vue component library, which I use in my other projects grasshopper, flow and more. When I need a new feature in a component provided by the library, I want to easily make that change and have its effects visible in real time.

Goal

Let's define what I want when developing app and library at the same time:

- live sync: Changes in the library should be available instantly in the app without manually running commands

- minimally invasive: Switching to "local dev mode" should not include having to modify files that are part of version control. Keep reading to know what I mean by that.

- webpack: It has to work with webpack

- cross env: It would be great if it works across filesystems. Some of my projects are on windows (grasshopper for example), some projects are on linux (WSL or my home lab server) and some projects run npm inside docker

- consistency: it should work in a CI/CD pipeline and when committing a change in the app it should include/reference any changes made in the library

- good dev experience

Existing tools

First, let's take a look at what is already there.

npm publish 📦

The obvious approach is publishing a new package version after each change and then running npm update in the app that uses the library.

This is basically what I currently do, with the slight difference that I do not have to run npm publish each time and I do not use the public npm repository for it.

I have a git webhook that "publishes" a fake version 0.0.<timestamp> to a selfhosted web-instance acting as npm repository. I then point my @codingkiwi/* packages to this repo using .npmrc

| criterion | verdict |
|---|---|
| live sync | ❌ manual cli commands |
| minimally invasive | ❌ fake npm versions that only work with the custom registry |
| webpack | ✅ |
| cross env | ✅ |
| consistency | ✅ |
| dev experience | ❌ waittime until fake publish is done, running npm update manually |

npm link 🔗

    /projects
        app
            node_modules
                library --> <global folder>/library --> ../../library
        library

example folder setup

npm link works in two steps. First, run npm link in the library folder. This installs the package globally by creating a symlink at <npm global folder>/node_modules/library that points to /projects/library.

The second step is to run npm link library in the app. This creates a second symlink at /projects/app/node_modules/library that points to <npm global folder>/node_modules/library.

Another alternative is

$ npm install --no-save <package-path>

which creates a symlink without installing the package globally. --no-save is necessary because otherwise the dependency in the package.json gets changed to a file: dependency.

| criterion | verdict |
|---|---|
| live sync | ✅ symlinks are live |
| minimally invasive | ✅ npm link does not change the package.json |
| webpack | (✅) requires configuring resolve.symlinks |
| cross env | ❌ npm link requires you to have app and library on the same filesystem, for docker this would at least require some sort of special configuration |
| consistency | ❌ up to the developer, for example manually publishing a new library version and then updating the app dependency |
| dev experience | ❌ not really. It requires webpack config, and without knowing this beforehand the "module not found" errors would be very confusing. There are some other issues too, make sure to check out this excellent post for more details |

yalc ❓

yalc acts as a local repository to solve problems with symlinks

First you run yalc publish in library which "publishes" the package to the local repo.

Running yalc add library or yalc link library in the app folder installs the published content. yalc add modifies the package.json to add a file: dependency, while yalc link creates a symlink instead and leaves the package.json untouched.

yalc has many more cool features, like automatically pushing a published change to all apps that depend on it and more.

| criterion | verdict |
|---|---|
| live sync | ❌ symlinks are live but yalc requires running publish after each change, or using third party tools like nodemon or yalc-watch |
| minimally invasive | ❌ yalc link does not change the package.json but it copies the library to a .yalc folder which needs to be added to .gitignore. Otherwise yalc suggests a pre-commit hook to prevent you from accidentally committing the changed package.json |
| webpack | ✅ worked for me, even without configuring resolve.symlinks if using symlinks |
| cross env | ❌ like npm link, yalc link requires you to have app and library on the same filesystem, for docker this would at least require some sort of special configuration |
| consistency | ❌ up to the developer, for example manually publishing a new library version and updating the app dependency. yalc does say you could also keep .yalc and yalc.lock as part of the repository but this is like committing the node_modules folder |
| dev experience | ❌ I tried it out and had multiple problems: running npm install after yalc link removes the link, and there is no live sync, as explained above |

monorepos 🐉

Monolithic repositories seem to be growing in popularity, but are also quite controversial. In my opinion they are great if your whole codebase is js, which means you can use tools like lerna or npm workspaces.

The idea is basically this:

    monorepo/
        library1
        library2
        app1
            library1 --> ../library1
        app2
            library1 --> ../library1
            library2 --> ../library2

They bring many advantages, but it's just not the right tool for me. Throwing Laravel, electron and node apps and js library projects all in one repo seems weird.

What if some packages are public and some are not? Migrating existing projects to a monorepo means either losing git history or working with git submodules.

I know there are more solutions like git subtree (used by symfony for example) or git submodules, but they introduce a whole different set of problems that I do not want to go into too much detail about.

Introducing rune

As you might expect from me by now, this frustration naturally led me to want to code my own solution in nodejs.

File Sync v1

Sadly it is not as easy as simply copying the package project folder over into the target app folder. Some things have to be excluded.

- stuff ignored in the .gitignore file, namely the node_modules folder

- stuff ignored in the .npmignore file

The .npmignore file is an optional override of the .gitignore file, and my first attempt was to take the patterns of the .gitignore and .npmignore files and put them into the ignore list of the file watcher.

I had some problems with this because the .gitignore and .npmignore files, as well as the file watcher, all have different syntax which would require some sort of translation. Also, you are allowed to have ignore files in nested directories, adding to or overriding the rules defined in the parent directories.

My first approach was to use the ignore-walk package, which is also used internally by npm. I used it to create a file list of all the whitelisted files and every time the file watcher reported a change, I checked if the file was on the whitelist. The downside was that every time a new file was added or removed, the whitelist had to be rebuilt.

File Sync v2

My current approach is to assume that everything part of the git repository should be synced, so the flow works like this:

- get the current git ref by running git rev-parse HEAD

- empty the folder in the target location target_app/node_modules/package

- instruct the app to run npm i git+http://myrepo/package#ref, which installs the package as a git dependency but locked to the commit ref

- to detect changes I have the chokidar file watcher that triggers a debounced update method. This method runs git status -z --untracked-files=all, which gives me a list of all files that have been added, changed or deleted, including untracked files. These are the files that differ from the project state at the installed commit ref and have to be synced over

- to have some sort of incremental syncing and to detect all changes I programmed it like this:

  - when a package is requested, instruct the app to run the initial npm install

  - send over an initial diff based on the git status command, this is the difference between the current project status and the committed status

  - save the list of changed / added / deleted files

  - when a new file change occurs, run the git status command again but compare the list to the saved list

    - if a file was listed as modified and now it isn't, we need to send it again to restore it to the initial state

    - if a file was listed as deleted and now it isn't, it might have been restored, send it if it exists

    - if a file was listed as added and now it isn't, it might have been removed, delete it if it doesn't exist
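The comparison step above can be sketched roughly like this. This is not the actual rune code: the function names parseGitStatus and planSync, the existsOnDisk callback and the simplified mapping of git's two-letter status codes to added / modified / deleted are all assumptions made for illustration. It also simply re-sends every file that is currently listed, which a real implementation would probably narrow down.

```javascript
// Parse the NUL-separated output of `git status -z --untracked-files=all`
// into a Map of path -> "added" | "modified" | "deleted".
// Simplification: the two status letters are collapsed into one state.
function parseGitStatus(output) {
  const entries = new Map();
  const fields = output.split("\0");
  for (let i = 0; i < fields.length; i++) {
    const field = fields[i];
    if (field.length < 4) continue; // skips the empty field after the last NUL
    const code = field.slice(0, 2); // e.g. " M", "??", "A ", " D", "R "
    const path = field.slice(3);
    if (code.includes("R") || code.includes("C")) {
      // Renames and copies carry the original path in the following field.
      const from = fields[++i];
      entries.set(path, "added");
      if (code.includes("R")) entries.set(from, "deleted");
    } else if (code.includes("D")) {
      entries.set(path, "deleted");
    } else if (code === "??" || code.includes("A")) {
      entries.set(path, "added");
    } else {
      entries.set(path, "modified");
    }
  }
  return entries;
}

// Compare the saved status with the new one and decide what to transfer.
// `existsOnDisk` is a hypothetical callback, e.g. (p) => fs.existsSync(p).
function planSync(previous, current, existsOnDisk) {
  const send = new Set();
  const remove = new Set();
  for (const [path, state] of current) {
    if (state === "deleted") remove.add(path);
    else send.add(path);
  }
  for (const [path, state] of previous) {
    if (current.has(path)) continue; // still listed, already handled above
    if (state === "modified") {
      send.add(path); // back to the committed state, restore it on the receiver
    } else if (state === "deleted") {
      if (existsOnDisk(path)) send.add(path); // file was restored
    } else if (state === "added") {
      if (!existsOnDisk(path)) remove.add(path); // new file was removed again
    }
  }
  return { send: [...send].sort(), remove: [...remove].sort() };
}
```

After each run the new Map replaces the saved one, so the next comparison is always made against the most recent state.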

Why empty the package before running npm install? npm does not "repair" a package or replace its contents if it already exists, at least this is what I observed. I had a modified scss file, and even running npm install with the --force flag, which should force a re-download, did not restore the scss file to its initial state.

Why include the untracked files? When I'm developing, I create new files and don't add them to git immediately, I only add them when the thing I'm doing works and I'm sure the new file should be part of git, but until it is, I want to sync it over to make the development process more fluid.

Webpack and npm

Understandably, webpack assumes that nothing changes in the node_modules folder unless you install or remove packages. This means that changes to the folder are ignored because webpack has cached the contents.

There is a config option for this, snapshot.managedPaths, and its counterpart snapshot.unmanagedPaths. I tried adding my package folder to the unmanagedPaths option, but it did not work.

What did work was setting the managedPaths option to an empty array, but this of course completely ruins the caching and performance that comes with it. A quick github code search also revealed that apparently nobody on the internet uses it. ^^

By default, webpack assumes that the node_modules directory, which webpack is inside of, is only modified by a package manager. Hashing and timestamping is skipped for node_modules. Instead, only the package name and version is used for performance reasons. Symlinks (i. e. npm/yarn link) are fine. Do not edit files in node_modules directly unless you opt-out of this optimization with snapshot.managedPaths: []. When using Yarn PnP webpack assumes that the yarn cache is immutable (which it usually is). You can opt-out of this optimization with snapshot.immutablePaths: []

https://github.com/webpack/webpack/issues/11612#issuecomment-705790881

So I experimented a bit: I went into the node_modules folder and began changing and deleting files and folders.

- webpack does trigger a rebuild every time a file that is part of the parent app bundle is modified, but it uses a cached version of the file

- modifying the package.json or node_modules folder inside the package folder can trigger a rebuild and if it is done after a certain idle time it wrecks the build

- moving the package folder also wrecks the build instantly

However, I found a way to notify webpack of the change. How does webpack detect the installation of a new package? The simple answer is that webpack watches the package.json file. I confirmed this by running touch package.json and voila, webpack did a rebuild, with the changes in the files modified by me.
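Doing the same from Node instead of the shell could look like this. It is a minimal sketch: the helper name touch is made up, and which package.json to pass in is left to the caller.

```javascript
const fs = require("node:fs");

// Bump the access and modification time of a file without changing its
// contents, similar to running `touch <file>` on the command line.
// Unlike `touch`, this throws if the file does not exist.
function touch(filePath) {
  const now = new Date();
  fs.utimesSync(filePath, now, now);
}
```

Calling touch("package.json") after a sync should then have the same effect on webpack as the manual command.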

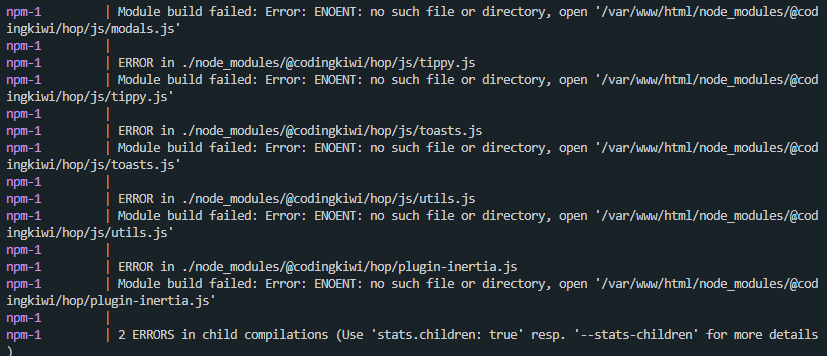

Webpack was not so happy about me ripping away the whole project folder under its feet when doing the initial npm install though:

I tried only deleting all files in the package folder except package.json and node_modules instead of the whole folder, which does not wreck the build but since npm is incredibly lazy/optimized it does not re-install the missing files.

Doing an npm uninstall followed by the npm install worked a few times but is considerably slower. The key takeaway is to clear webpack's cache. So removing the folder, then intentionally wrecking the build by touching the package.json and then doing the npm install should work, right? ..nope

Sometimes it automatically recovers from it, sometimes it gets stuck at complaining about missing files even though they do exist, no matter what I do.

The only thing that works is waiting 1 minute. It seems like the folder ends up on some kind of blacklist because it has too many frequent changes. This might not even be webpack's fault if it happens on a system level. Sadly I was not able to find a solution to this yet, so a manual restart of the webpack process is necessary, or simply running webpack after rune.

What I might try out: copy the app's package.json and package-lock.json to a tmp directory, run npm install, copy the package from the tmp dir to the real directory. This might keep webpack alive but it would take very long. (Installing all app dependencies is necessary because npm's algorithm may create node_modules folders inside packages to resolve conflicts, so it needs the "full picture")

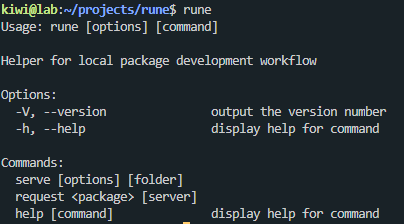

Usage

The tool is provided as a cli command. When you add a "bin" section to the package.json and install the package globally using npm i -g ... it is available everywhere. It has to be installed on every machine that is involved though:

There is the sender and the receiver. I run rune serve . in my projects folder containing the library package(s). This fires up a http server with a websocket.

Running rune request hop or rune request @codingkiwi/hop connects to the server and begins the sync. This assumes sender and receiver are running on the same machine though. The request command accepts an additional parameter where you can specify the url of the server which allows you to run the server basically anywhere.

CLI handling is done by the wonderful commander package.

I will try and improve this for a few more weeks before putting it on npm for everyone to use so stay tuned, I will put the link here.

Update 29.06.2024

This was not as reliable as I thought. I also tried another approach of running npm pack in the package folder and then npm i package.tar.gz --no-save in the target project, which worked to some extent, but at some point webpack would not let go of the cache. It seems like getting the files to the project is not the problem, webpack is the problem.

At some point the npm install of the tar always crashed webpack because apparently it could not find the package.json even though it was there. Because I have webpack running in an auto-restart docker container this kind of works but is very janky.

Update 30.06.2024

Hashing and timestamping is skipped for node_modules. Instead, only the package name and version is used for performance reasons.

It seems like updating the version in the package.json is a more reliable way to trigger a cache refresh. So after running npm pack, I modify the package.json inside the tar and append a random id.
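A rough sketch of the version rewrite, operating on the text of a package.json. The suffix format (-rune followed by 8 hex characters) and the function name are assumptions, and getting the file out of and back into the tarball is left out:

```javascript
const crypto = require("node:crypto");

// Return the package.json text with a random id appended to the version,
// e.g. "1.2.3" -> "1.2.3-rune1a2b3c4d". A suffix from a previous run is
// replaced instead of stacked.
function withRandomVersion(packageJsonText) {
  const pkg = JSON.parse(packageJsonText);
  const id = crypto.randomBytes(4).toString("hex"); // 8 hex characters
  const base = String(pkg.version).replace(/[-.]rune[0-9a-f]{8}$/, "");
  // Keep the result a valid semver prerelease: "-" starts the prerelease
  // part, "." extends one that already exists (e.g. "1.0.0-beta.1").
  const separator = base.includes("-") ? "." : "-";
  pkg.version = `${base}${separator}rune${id}`;
  return JSON.stringify(pkg, null, 2) + "\n";
}
```

Since the version changes on every sync, webpack sees a "new" package each time, even though the name stays the same.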

I have no clean solution to webpack crashing yet. My current solution is starting a 10ms interval that continuously runs "touch" on the package.json and stops doing that after the install has completed. As long as the interval is shorter than webpack's aggregateTimeout it should work.
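The interval workaround, sketched under a few assumptions: the names startTouching and installWithTouching are made up, install stands for whatever async function runs the npm install, and ignoring a briefly missing file is my own guess at what is needed while npm replaces the package.

```javascript
const fs = require("node:fs");

// Touch the file immediately and then every `intervalMs` milliseconds.
// Returns a function that stops the interval.
function startTouching(filePath, intervalMs = 10) {
  const touch = () => {
    const now = new Date();
    try {
      fs.utimesSync(filePath, now, now);
    } catch (err) {
      // Assumption: the file may be missing for a moment during the install.
      if (err.code !== "ENOENT") throw err;
    }
  };
  touch();
  const timer = setInterval(touch, intervalMs);
  return () => clearInterval(timer);
}

// Keep touching package.json while the (hypothetical) install runs,
// and stop afterwards, whether the install succeeded or not.
async function installWithTouching(packageJsonPath, install) {
  const stop = startTouching(packageJsonPath);
  try {
    return await install();
  } finally {
    stop();
  }
}
```

The try/finally matters here: if the install throws and the interval is never cleared, the process keeps touching the file forever.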