SQL Batch inserts are faster

At my current employer a PHP script was used that extracts entries from a large database table, optimizes them and saves them in a second table.

This script was written years ago and it ran every day for 12 hours. Although the general rule "never change a running system" definitely could apply here, I thought that this can be improved for sure.

Lets start with some nerd stats

The "big" table

- 105.923.032 rows

- 908 MB gzipped sql dump

- 8.2 GB unzipped sql dump

- 14 GB database size on disk after import

- 30 minutes import duration

Runtime before optimizing

Due to the special type of data, the script fetches the rows in 800 batches. I let the script run for one of these batches and measured the time

- 823.042 entries optimized to 772.511 entries

- 1h 33min runtime

This clearly shows that my local dev environment is not quite optimal, since the full script would then be running for more than a month, but more about that in the final comparison.

I scattered a few log entries in the code to measure each part of the code which revealed the culprit:

- Selecting the batch data: up to 2s

- optimizing the data: up to 700 ms

- inserting the data in the new table: 90 minutes!

Optimize

To be honest: At the beginning I was quite excited to deep dive into the optimization logic, trying out different approaches and algorithms, but in the end the solution was quite boring.

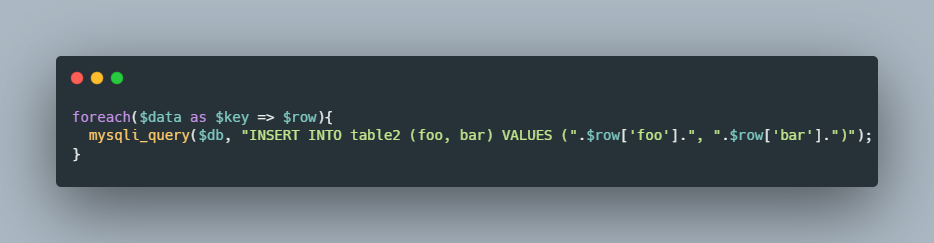

The old script simply looped over the final data, which is 770k entries for batch 1, and inserted them one by one.

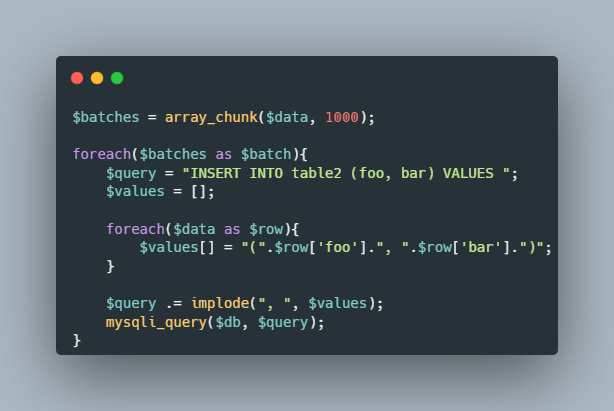

So lets see if a batch insert helps

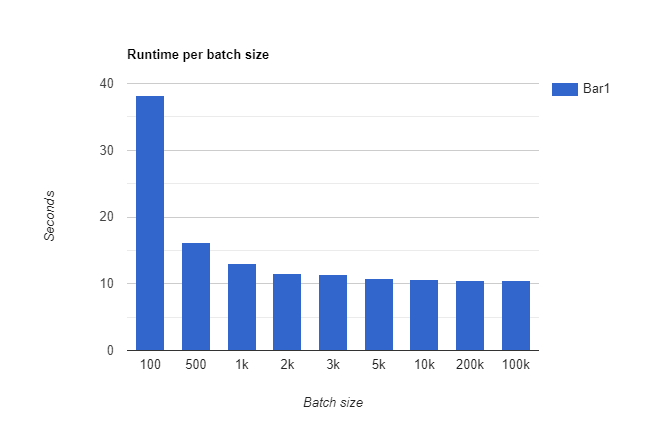

This reduced the runtime of batch 1 from 1h 33 min to 13 seconds!

2 more seconds can be saved by choosing a batch size of 5k instead, resulting in 11 seconds runtime for batch 1

Full run

The full run of all 800 batches took 1771 seconds, or 29 minutes. which is an average runtime of about 2 seconds per batch since they are not all the same size.

The improvement of batch 1 was 5885s * 0.00187 => 11s, so if the production script ran for roughly 12 hours, it should now run in 12 * 60 * 60s * 0.00187 = 81s seconds!

I chose not to touch the optimization code since even a huge improvement that reduces a batch optimization from 700 to 100 ms would only improve the final production runtime by only a few seconds or milliseconds, which is something but not worth the risk at this point to be honest.